Back in March, we wrote about our plans to conduct experiments on Snapshot Serengeti, showing users more of the species they seem to be most interested in, in the hope that they would stay longer and classify more images.

We’ve now run our first “happy user” experiment doing just that, and in this post we will outline the preliminary results. But first, let’s review how the experiment worked:

- For each user, we worked out which species they were most interested in by looking at the images they had previously favourited, shared, or otherwise expressed interest in.

- Each user was assigned to either the “control” cohort, or the “interesting” cohort.

- Users in the control cohort were presented with 50 random images to classify, just as is normal on the project.

- Users in the interesting cohort were presented with 20 images known to contain their two most liked species, and 30 random images.

- Each user was presented with their images in a random order.
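As a rough illustration of the set-up above (a minimal sketch, not the code used in the experiment), the two kinds of image set could be built as follows. The function name, the image id format, and the two pools are hypothetical, the pools are assumed not to overlap, and how users were assigned to cohorts is left out because it is not described here.

```python
import random

SET_SIZE = 50   # images shown to every user in the experiment
N_LIKED = 20    # images of liked species shown to the "interesting" cohort


def build_image_set(cohort, random_pool, liked_pool, rng):
    """Return the shuffled list of image ids to show one user.

    random_pool and liked_pool are hypothetical lists of image ids,
    assumed not to overlap.
    """
    if cohort == "control":
        images = rng.sample(random_pool, SET_SIZE)
    elif cohort == "interesting":
        images = rng.sample(liked_pool, N_LIKED)
        images += rng.sample(random_pool, SET_SIZE - N_LIKED)
    else:
        raise ValueError(f"unknown cohort: {cohort!r}")
    rng.shuffle(images)  # every user sees their images in a random order
    return images


# Made-up pools, purely for illustration.
rng = random.Random(42)
random_pool = [f"random-{i}" for i in range(1000)]
liked_pool = [f"liked-{i}" for i in range(100)]

interesting_set = build_image_set("interesting", random_pool, liked_pool, rng)
control_set = build_image_set("control", random_pool, liked_pool, rng)
```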

We then compared the two cohorts based on:

- how many images they classified before leaving the site

- how long they stayed on the site (“session length”)

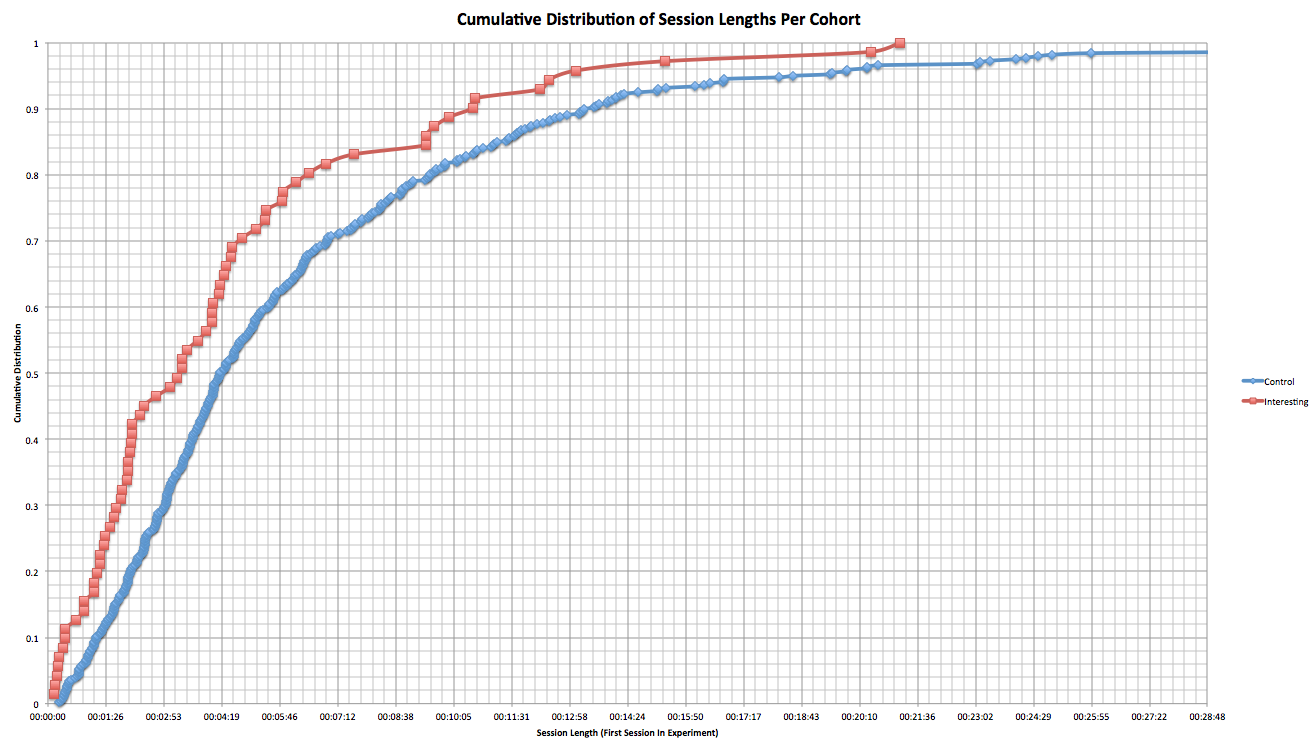

We found something very strange. The “interesting” cohort seemed to spend less time on the site:

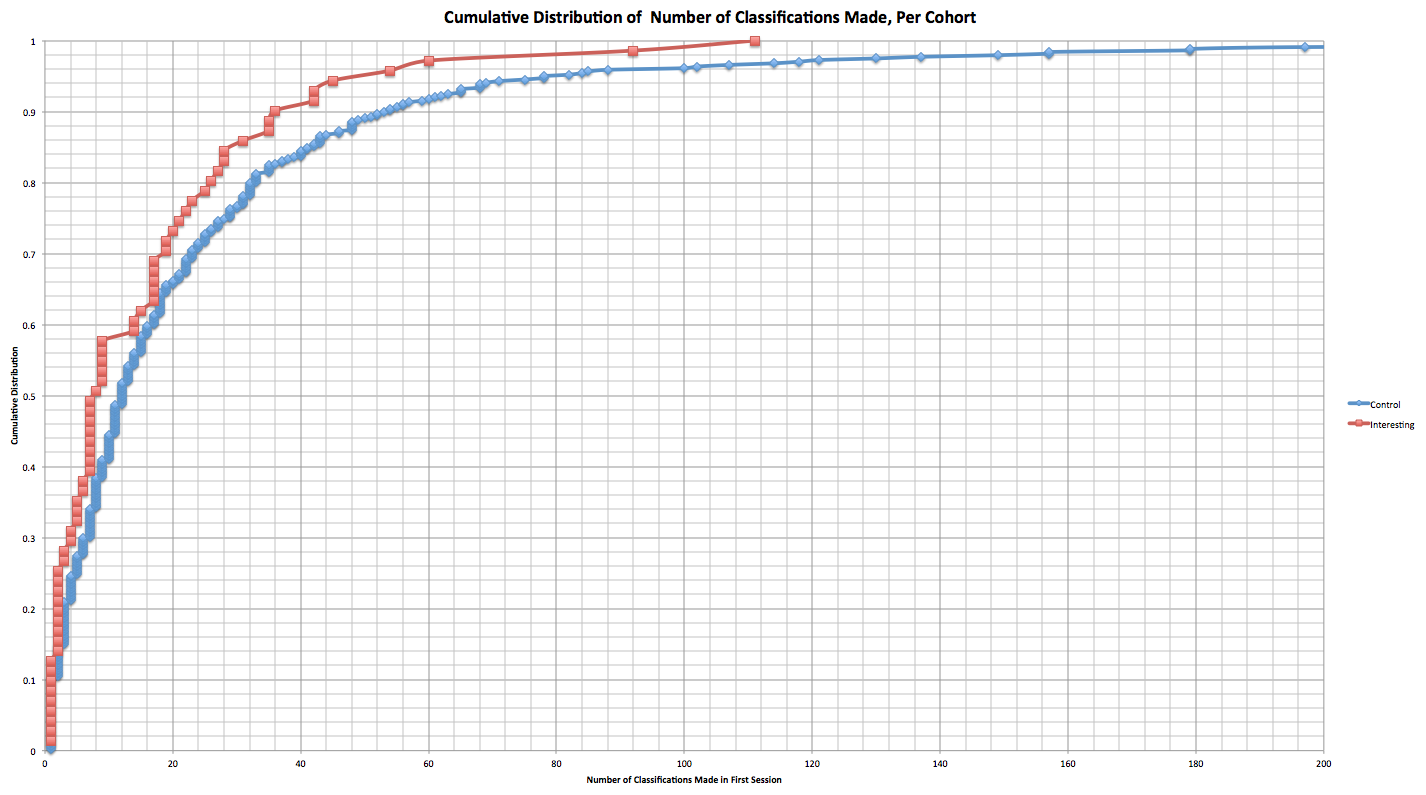

And they classified fewer subjects than those in the control cohort:

This was surprising and counter-intuitive: the very opposite of the result we had hoped for. We thought that giving people more of the animals they liked would increase their engagement, but it seems to have had the opposite effect.

In trying to explain this, we began to wonder: Could it be that by changing the set of images a user is presented with, we had somehow removed something that encourages them to stay longer?

One theory that came up was that the number of “blank” images – that is, images that contain no animals – might have an effect. We know that around 70% of our images are blank, but we were not quite sure what effect – positive or negative – the blanks have on user behaviour.

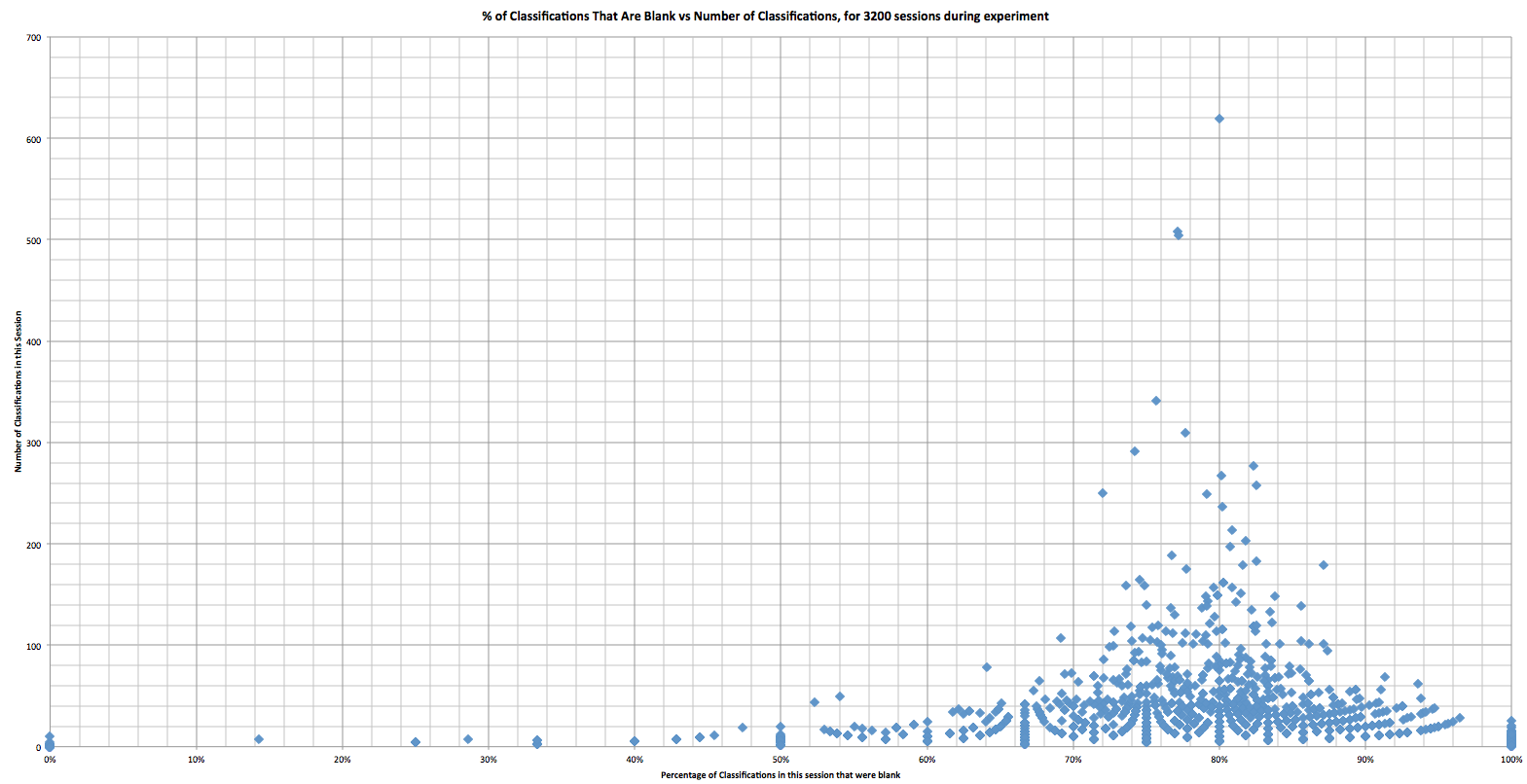

So we looked at all user sessions across the experiment, and figured out what percentage of the subjects seen were in fact blank. The distribution looks like this:

And here we have something very interesting: the users who made the most classifications cluster around the 80% mark. In other words, if 80% of the images you see are blank, you are much more likely to stay longer and classify more images\*. This suggests a reason why the interesting cohort stayed for less time. In that cohort, 40% of the images (20 of 50) definitely contained animals, so only the remaining 60% could be blank. With around 70% of random images being blank, the interesting cohort would likely have seen about 42% blanks (70% of 60%), considerably below the “sweet spot” associated with maximum participation.

\* Note: Saying that an image is blank still counts as a classification.
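The arithmetic behind the 42% figure can be checked with a few lines of Python. This is only a sketch: the function name is made up, and it assumes that the random images are blank at the overall rate of about 70% and that the deliberately chosen images are never blank.

```python
def expected_blank_fraction(set_size, n_known_animal, base_blank_rate):
    """Expected share of blanks in a set where n_known_animal images
    are known to contain animals and the rest are drawn at random."""
    n_random = set_size - n_known_animal
    return n_random * base_blank_rate / set_size


control = expected_blank_fraction(50, 0, 0.70)
interesting = expected_blank_fraction(50, 20, 0.70)
print(f"control: {control:.0%}, interesting: {interesting:.0%}")
# prints "control: 70%, interesting: 42%"
```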

There are many interesting implications of this finding:

- It may be that the make-up of the subject set presented to a user has more of an effect than the properties of those images themselves. Future experiments will need to construct image sets very carefully – for example, to properly assess whether “interestingness” of the animals has an effect, we need to ensure every participant gets the same percentage of blanks.

- We had identified a need to predict which images don’t contain animals. This will now become even more important – not to remove those images, as we had thought, but to insert them strategically in order to get the most out of users.

- Careful consideration will need to be given as to how we spend our users’ time; inserting known blank images to yield more classifications is only justifiable if the classifications gained exceed the classifications lost by classifying blank images we already know about. One thing we are always conscious of at the Zooniverse is not wasting our users’ valuable time!
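One way to make the trade-off in the last point concrete is a simple break-even check. The sketch below is our own framing rather than an established rule, and every number in the example calls is invented for illustration: padding a set with known blanks is only worthwhile if the extra classifications it produces outnumber the classifications spent on those known blanks.

```python
def padding_is_worthwhile(baseline_classifications,
                          padded_classifications,
                          known_blank_fraction):
    """Compare classifications gained by padding with known blanks
    against classifications spent on those known blanks.

    baseline_classifications: classifications per session without padding
    padded_classifications:   classifications per session with padding
    known_blank_fraction:     share of the padded set that is known blanks
    """
    gained = padded_classifications - baseline_classifications
    lost = padded_classifications * known_blank_fraction
    return gained > lost


# Hypothetical numbers only.
print(padding_is_worthwhile(20, 30, 0.2))  # gained 10, lost 6   -> True
print(padding_is_worthwhile(20, 24, 0.2))  # gained 4,  lost 4.8 -> False
```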

We will now look to verify this finding in our historical classification data, and will use our findings to better inform the design of our next experiments with Snapshot Serengeti and with MICO. We will conduct further analysis that takes this factor into account by comparing only users who have seen the same percentage of blank images.
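A minimal sketch of what such a like-for-like comparison might look like is shown below. It is an assumption about how the analysis could be structured, not a description of the analysis itself: the session tuples, the 10% bin width, and the function name are all hypothetical, and the example data is made up.

```python
from collections import defaultdict
from statistics import mean


def mean_classifications_by_blank_bin(sessions, bin_percent=10):
    """Group sessions by cohort and by the percentage of blanks seen,
    then return the mean number of classifications in each group.

    sessions: iterable of (cohort, n_blank, n_classified) tuples.
    Returns a dict keyed by (bin_lower_bound_percent, cohort).
    """
    top_bin = 100 // bin_percent - 1
    groups = defaultdict(list)
    for cohort, n_blank, n_classified in sessions:
        if n_classified == 0:
            continue  # no images seen, so no blank percentage to compute
        # Integer arithmetic avoids floating-point edge cases at bin borders;
        # sessions with 100% blanks are folded into the top bin.
        bin_index = min((100 * n_blank) // (bin_percent * n_classified), top_bin)
        groups[(bin_index * bin_percent, cohort)].append(n_classified)
    return {key: mean(values) for key, values in groups.items()}


# Made-up sessions: (cohort, blanks seen, images classified).
sessions = [
    ("control", 8, 10),
    ("control", 16, 20),
    ("interesting", 4, 10),
    ("interesting", 8, 10),
]
result = mean_classifications_by_blank_bin(sessions)
# Cohorts can now be compared within the same bin, e.g. the 80-90% bin:
print(result[(80, "control")], result[(80, "interesting")])
```

Comparing the cohorts within a bin, rather than overall, keeps the percentage of blanks roughly constant so that any remaining difference is less likely to be caused by it.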

The other interesting question is why seeing 80% blanks yields a greater level of participation. At this point we can only speculate. It could be that too few blanks make the task seem more arduous and more boring, while if you never see any animals you also get bored. At a certain level, though, the blanks give space for anticipation, and the finding of animals stands out more and becomes more rewarding; the differential between blanks and animals intensifies the moment.